Capstone Project

TECHNOLOGY CATEGORY

Machine Learning / AI

INDUSTRY SPONSOR

Microsoft

PROBLEM SPACE

Open Source Tech

DATE COMPLETED

December 15, 2021

Machine Learning Monitor

Problem

Once a machine learning model is deployed into the wild, its performance can deteriorate when it encounters new data. ML engineers lack a signal that tells them how their models are performing and when they need to be updated.
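To make the idea of such a signal concrete, here is a minimal sketch of one common drift measure, the Population Stability Index (PSI), which compares the distribution of a feature or model score at training time against what the deployed model sees. This is an illustration only: the project description does not say which metrics the team used, the function names are hypothetical, and the 0.2 alert threshold is a widely quoted rule of thumb rather than a value from the project.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one numeric feature; larger means more drift."""
    if not reference or not live:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread to bin")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the reference sample; out-of-range
            # live values are clamped into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at a tiny value so the logarithm stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(reference)
    q = proportions(live)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def should_alert(psi: float, threshold: float = 0.2) -> bool:
    """Hypothetical alert rule; 0.2 is an assumed rule-of-thumb cutoff."""
    return psi > threshold


# Example: the live data has shifted toward the upper half of the range.
training_scores = [i / 100 for i in range(100)]
production_scores = [0.5 + i / 200 for i in range(100)]
psi = population_stability_index(training_scores, production_scores)
print(f"PSI = {psi:.2f}, alert = {should_alert(psi)}")
```

A monitoring job could compute a value like this on a schedule and raise an alert when it crosses the configured threshold, prompting engineers to inspect or retrain the model.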

Approach

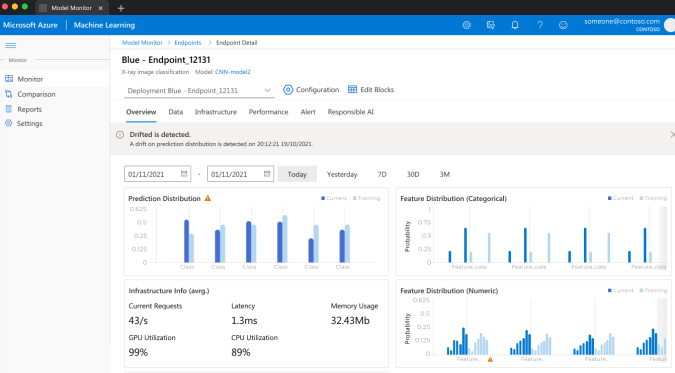

The team created a customer-focused dashboard and performed usability tests and heuristic evaluations with Azure Machine Learning Studio engineers.

Solution

Users can set up model monitoring jobs at deployment time via config files and commands. A detailed dashboard includes features such as model performance statistics, model comparison, alert configuration, and report generation.

Team Members

Yun-Cheng (Jerry) Wang

Yanzhang Li
